A precision agriculture startup has developed a series of algorithms in R which it combines in various configurations to consume and process satellite imagery and soil sample datasets. The company is building its own web-based SaaS user interface, and needs to automate its data processing algorithms in the cloud so that they can be triggered from the web application. The company cannot expose its IP (algorithms) outside of its own cloud account, and needs to launch quickly and without spending millions of dollars on DevOps and data engineering.

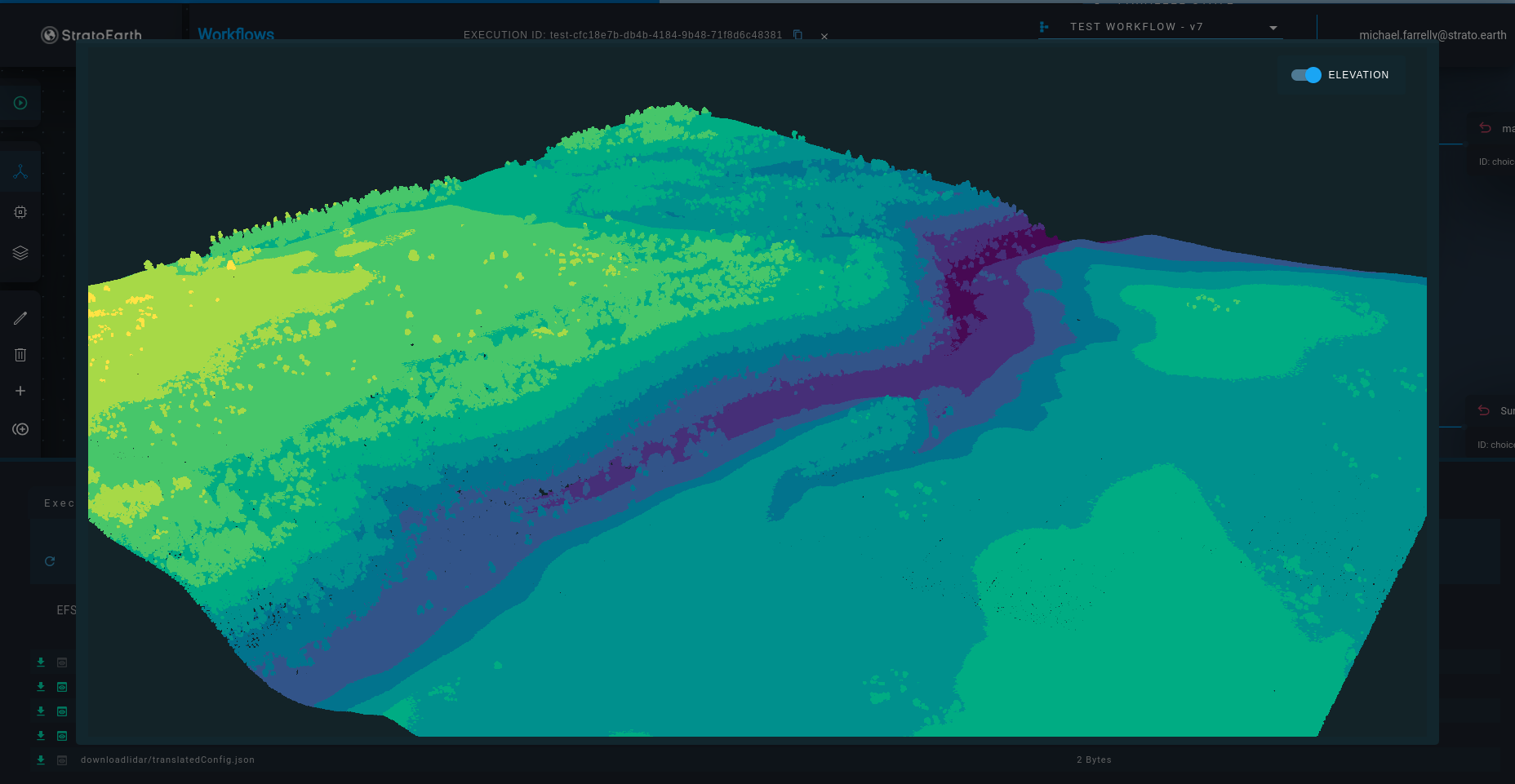

An engineering firm prepares for its infrastructure projects by creating 3D visualizations and geomatic analytics of project sites based on LiDAR datasets. The firm has developed its own range of Python algorithms for processing the LiDAR, and is now looking for a scalable, rapid and cost-effective way to automate these LiDAR processing workflows in the cloud.

Both organizations can use the Strato Workflows Github integration and drag & drop dashboard to Dockerize and transform their data processing algorithms into automated cloud-based workflows in a matter of days, and without exposing their IP or data. The workflows can be triggered via a RESTful API request (such as from the precision agriculture firm's custom UI), or manually via the Strato Workflows dashboard. The outputs of the workflows can be automatically placed in a designated AWS S3 bucket or written to a database.

Reduced complexity & engineering hours: The Github integration and drag & drop dashboard allow the data scientists who wrote and understand the algorithms to build and maintain the automated workflows themselves. This cuts out the lengthy, error-prone cycles of communication and verification between data science and DevOps/data engineering teams.

Speed: Because Strato Workflows provides industry-grade cloud infrastructure and CI/CD out of the box, and allows data scientists to build workflows with a simple drag & drop interface, companies are able to achieve automation in days and weeks instead of months and years.

Best practices & no vendor lock-in: Strato Workflows enforces software development best practices, such as version control (Github) and containerization (Docker). Workflows themselves can be exported as Amazon States Language (ASL) JSON files and imported directly into the AWS Step Functions console.

Security & IP protection: Strato Workflows is launched in your organization's own AWS account (to protect your IP), with expert security configuration implemented via AWS services such as Cognito, VPC, IAM and Systems Manager.

Reduced cloud fees: No additional cloud fees are added on top of your organization's AWS bill. Moreover, Strato Workflows helps you monitor and reduce cloud fees through serverless architectures, billing alarms, and automated compute pricing estimates.
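This page does not describe how Strato Workflows computes its pricing estimates. As a rough illustration of the arithmetic involved, the sketch below estimates the cost of Fargate task runs. It assumes Fargate's published billing model: vCPU and memory are billed separately by duration, rounded up to the second, with a one-minute minimum for Linux tasks. The per-hour prices are inputs you would look up for your region. The function names are hypothetical, and the rates used in any example are illustrative, not AWS's actual prices.

```python
import math
from typing import Iterable, Tuple


def estimate_fargate_task_cost(
    vcpu: float,
    memory_gb: float,
    duration_seconds: float,
    price_per_vcpu_hour: float,
    price_per_gb_hour: float,
    minimum_seconds: int = 60,
) -> float:
    """Rough cost estimate for a single Fargate task run (hypothetical helper).

    Duration is rounded up to whole seconds and floored at a minimum billable
    duration (one minute for Linux tasks, per AWS's billing model). Prices are
    parameters so that current regional rates can be supplied.
    """
    billed_seconds = max(math.ceil(duration_seconds), minimum_seconds)
    billed_hours = billed_seconds / 3600
    return billed_hours * (vcpu * price_per_vcpu_hour + memory_gb * price_per_gb_hour)


def estimate_workflow_cost(
    runs: Iterable[Tuple[float, float, float]],
    price_per_vcpu_hour: float,
    price_per_gb_hour: float,
) -> float:
    """Sum estimates over (vcpu, memory_gb, duration_seconds) for each task run."""
    return sum(
        estimate_fargate_task_cost(v, m, d, price_per_vcpu_hour, price_per_gb_hour)
        for v, m, d in runs
    )
```

For example, `estimate_workflow_cost([(1, 2, 30), (2, 4, 3600)], 0.04, 0.004)` estimates a two-state workflow in which a short 1 vCPU / 2 GB task is billed for the one-minute minimum, followed by an hour-long 2 vCPU / 4 GB task.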

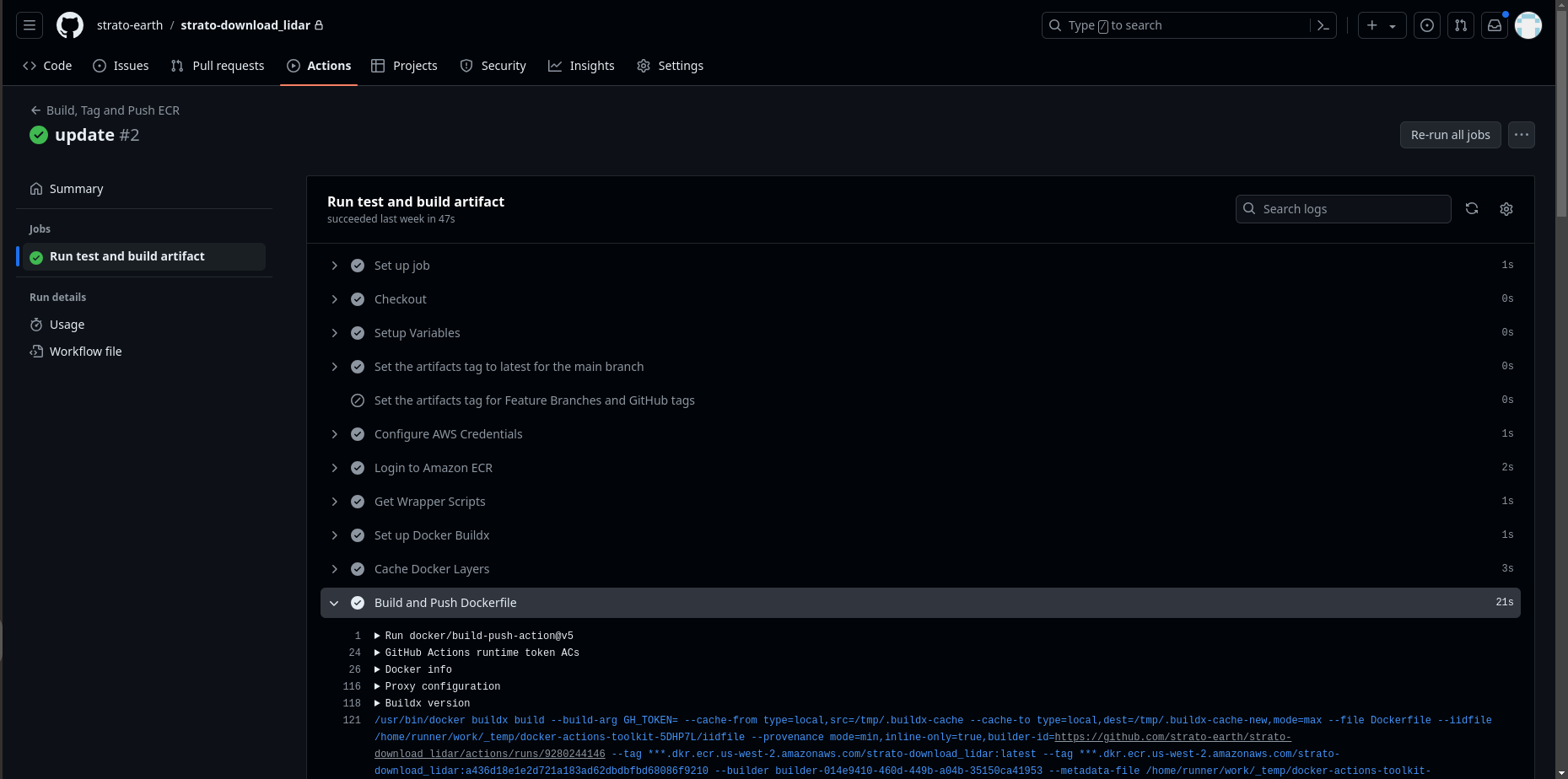

The Github integration is a CI/CD script which runs in the Github Actions of your organization's selected Github repositories. To install it, we provide a setup script that users run from their machine's terminal. The setup script either adds Strato Earth's CI/CD script to an existing repository or spins up an entirely new repository in your organization's Github account.

The repository created by the script includes a Dockerfile boilerplate and Github Actions YAML file. Users may add data processing algorithms written in any language to the repository.

When a commit is made to a designated branch, the repository's Github Actions are triggered, generating an updated Docker image and pushing it to the Elastic Container Registry (ECR) of the organization's AWS account. The Github Actions typically take only 1-2 minutes to run, allowing users to iterate rapidly and quickly see the results of code changes in their workflows.
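Strato Earth's actual Actions workflow file is not shown here. The sketch below illustrates, in Python, the decision logic such a pipeline needs:

- build only when the push targets the designated branch;
- work out the ECR image URI to push to.

It relies on two real GitHub Actions variables. `GITHUB_REF` holds a value such as `refs/heads/main` for a branch push, and `GITHUB_SHA` holds the commit SHA. The URI follows ECR's standard `<account>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>` format. The branch name, the repository name, and the convention of tagging images with the short commit SHA are hypothetical choices.

```python
from typing import Mapping, Optional


def ecr_image_uri(account_id: str, region: str, repository: str, tag: str) -> str:
    """Build a standard ECR image URI."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def image_to_publish(
    env: Mapping[str, str],
    designated_branch: str,
    account_id: str,
    region: str,
    repository: str,
) -> Optional[str]:
    """Return the image URI to build and push, or None if this push should be skipped.

    GITHUB_REF and GITHUB_SHA are set by GitHub Actions for push events.
    """
    if env.get("GITHUB_REF") != f"refs/heads/{designated_branch}":
        return None  # commit was not made to the designated branch
    short_sha = env["GITHUB_SHA"][:7]  # hypothetical tagging convention
    return ecr_image_uri(account_id, region, repository, short_sha)
```

Inside a workflow step, this would be called as `image_to_publish(os.environ, "main", ...)`. The actual `docker build` and `docker push` steps, plus the ECR login, would then use the returned URI.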

The Github integration itself is an industry-grade CI/CD system. Beyond building updated Docker images of your Github repository, it also runs static code and security analysis.

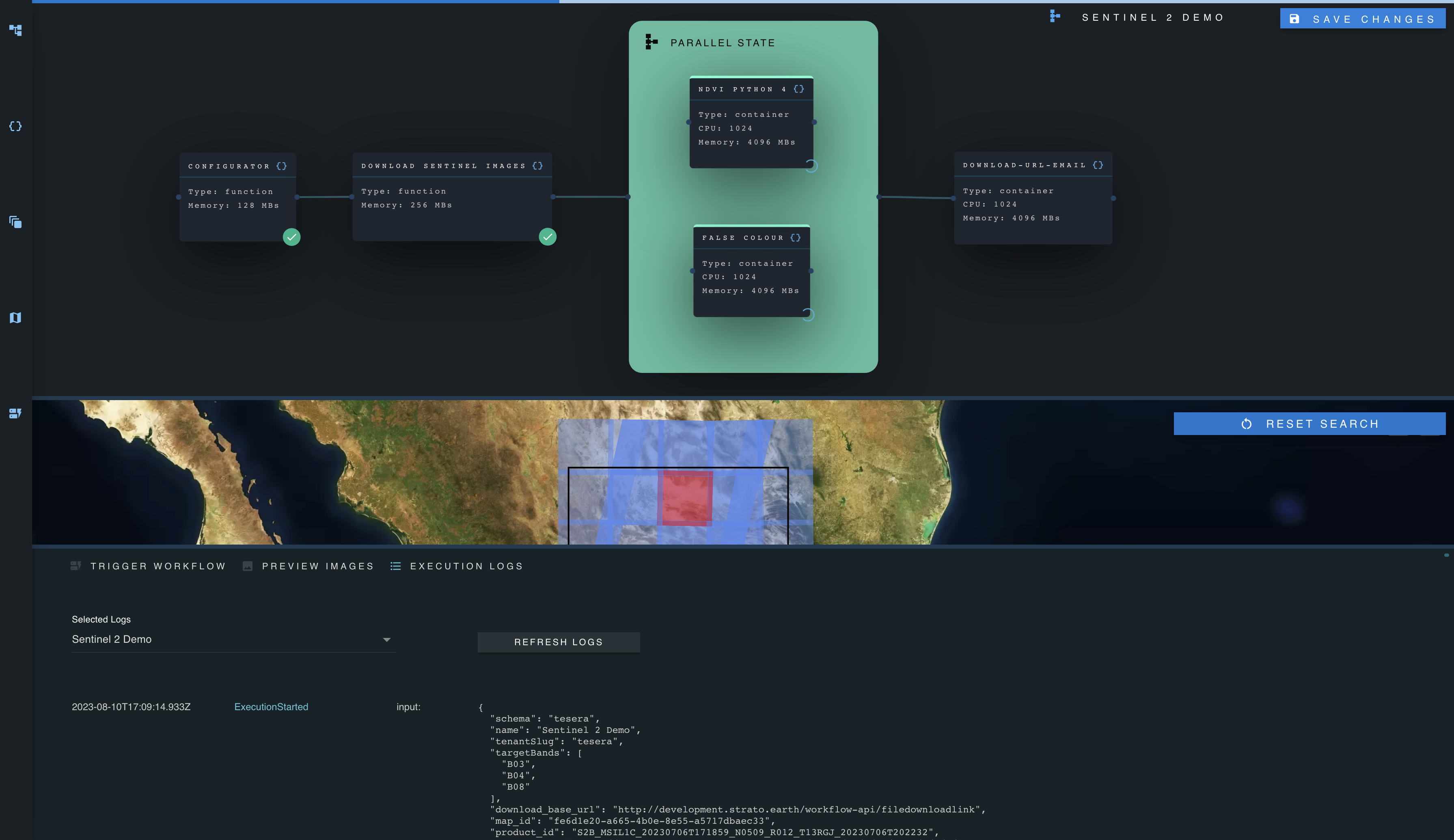

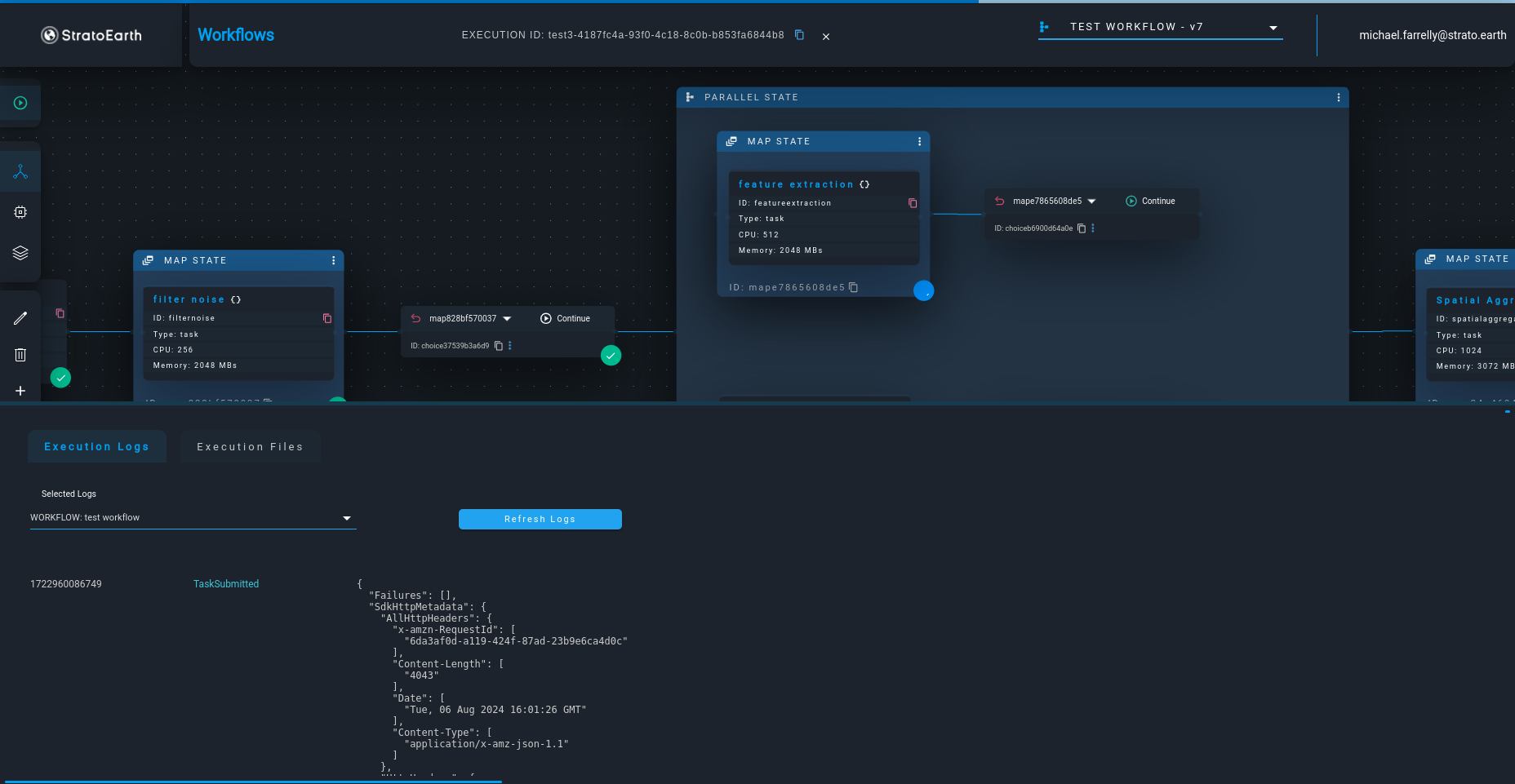

In the dashboard, users can build serverless compute tasks out of their Docker images and drag, drop and connect them together to create workflows.

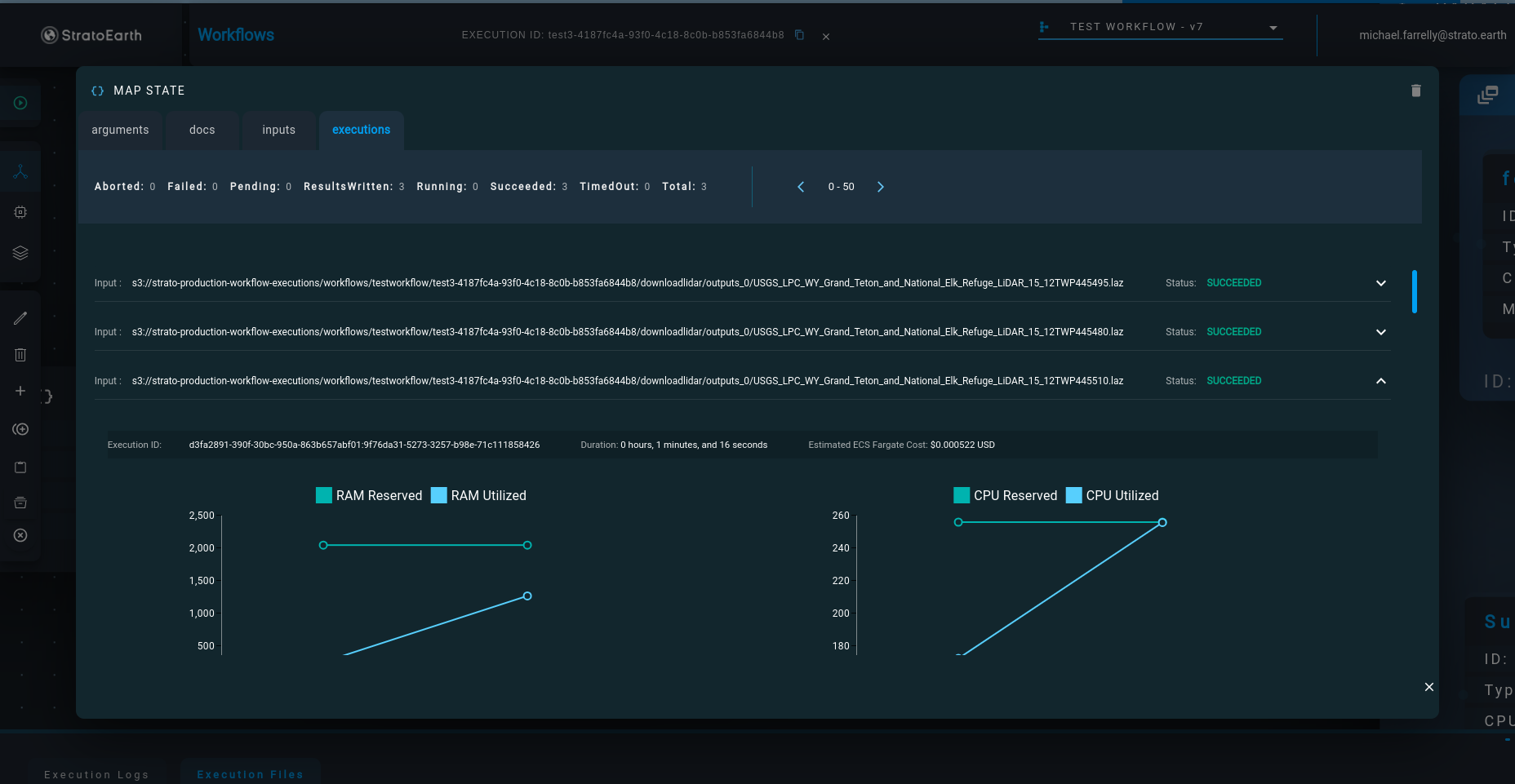

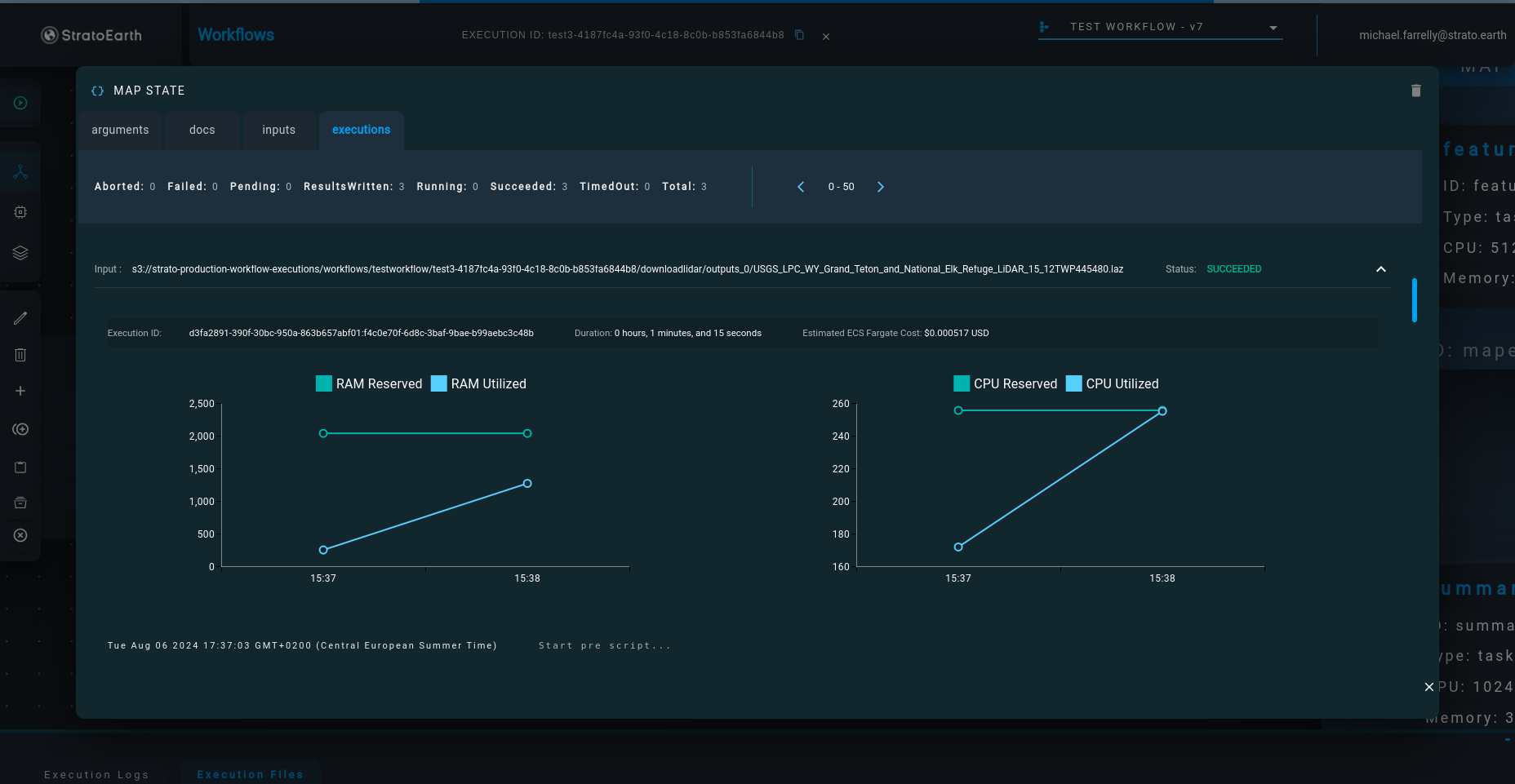

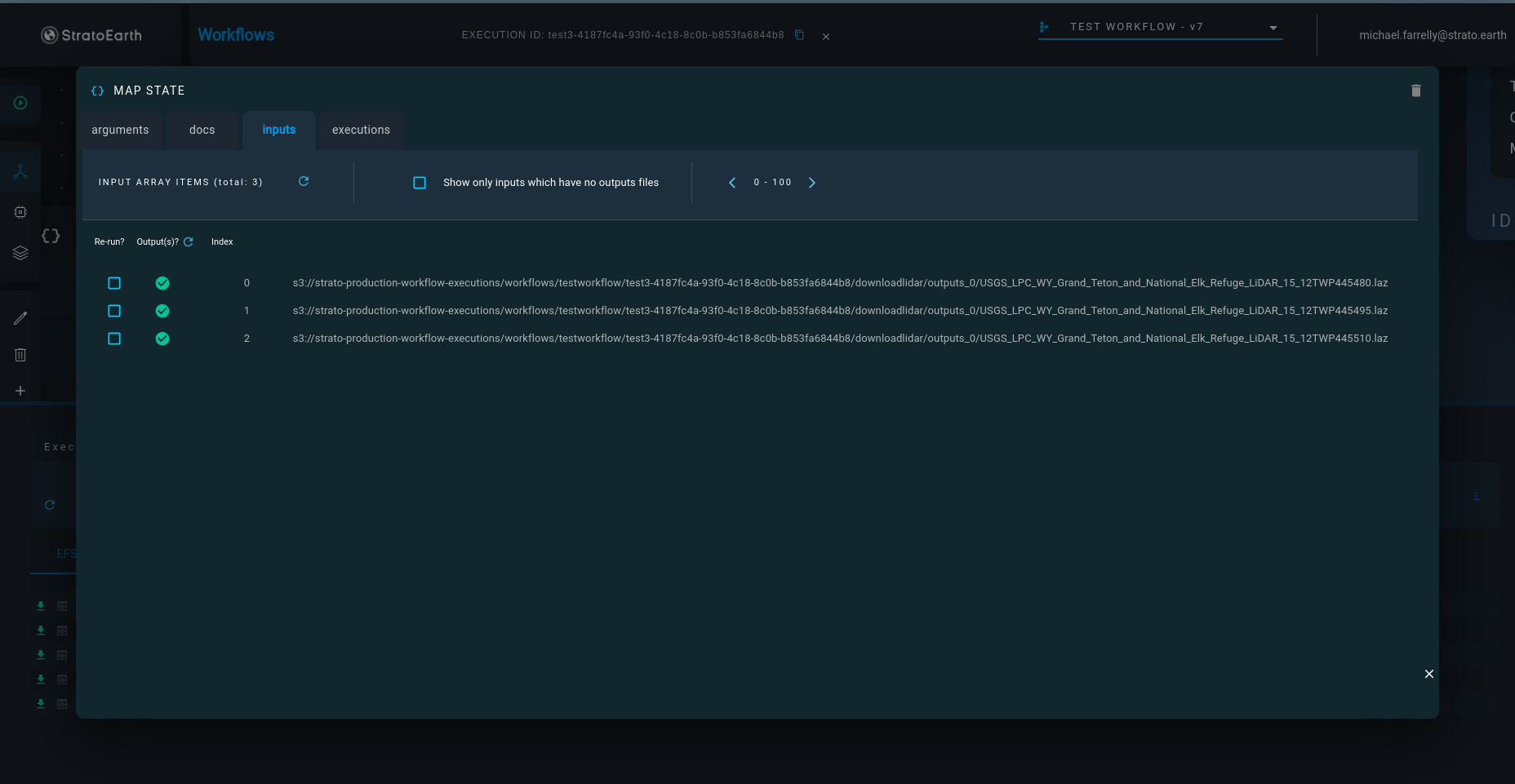

The workflows can be executed in the dashboard, where users can track execution progress in real time, and inspect logs and outputs.

Create as many dashboard user accounts as necessary for your team.

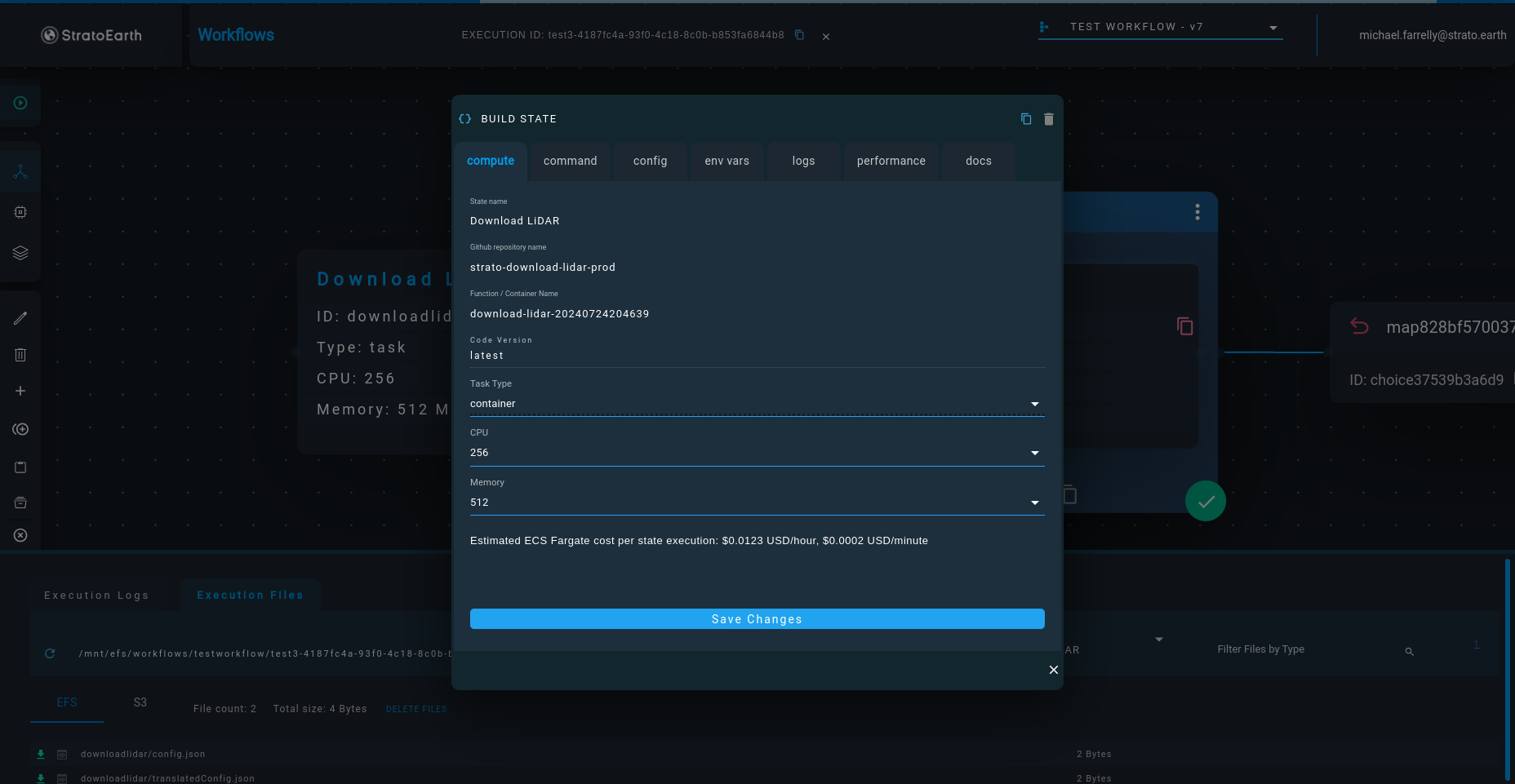

After the Github integration pushes a Docker image of your repository to AWS ECR, the image becomes available in the dashboard. The image can be configured to run as a serverless compute instance via AWS ECS Fargate: simply select the desired amount of RAM and CPU, and an ECS Fargate task is generated within a minute.
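Choosing "RAM and CPU" for Fargate is constrained: only certain CPU and memory pairs are valid. The sketch below checks a selection against the smaller sizes in AWS's documented Fargate task-size table, and larger sizes exist. It then builds a task definition in the shape accepted by the ECS `RegisterTaskDefinition` API. It is not Strato Workflows' internal code; the function name and example values are hypothetical. A real registration for a private ECR image also needs a task execution role, so one is included as a parameter.

```python
from typing import Dict, List

# Valid Fargate CPU units (1024 = 1 vCPU) -> memory options in MiB, following
# AWS's documented task-size table (smaller sizes only; check current docs).
FARGATE_MEMORY_OPTIONS: Dict[int, List[int]] = {
    256: [512, 1024, 2048],
    512: list(range(1024, 4096 + 1, 1024)),
    1024: list(range(2048, 8192 + 1, 1024)),
    2048: list(range(4096, 16384 + 1, 1024)),
    4096: list(range(8192, 30720 + 1, 1024)),
}


def fargate_task_definition(
    family: str, image_uri: str, cpu: int, memory: int, execution_role_arn: str
) -> dict:
    """Validate a CPU/memory choice and return RegisterTaskDefinition arguments."""
    if memory not in FARGATE_MEMORY_OPTIONS.get(cpu, []):
        raise ValueError(f"{cpu} CPU units / {memory} MiB is not a valid Fargate size")
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",  # Fargate tasks use awsvpc networking
        "cpu": str(cpu),  # the API takes task-level cpu/memory as strings
        "memory": str(memory),
        "executionRoleArn": execution_role_arn,  # lets Fargate pull from ECR
        "containerDefinitions": [
            {"name": family, "image": image_uri, "essential": True},
        ],
    }
```

With boto3 installed and credentials configured, the result could be registered with `boto3.client("ecs").register_task_definition(**td)`.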

The ECS Fargate task can then be added as a state in a workflow. The internal workflow engine is AWS Step Functions. As the user positions and connects different states in the dashboard, complex Amazon States Language (ASL) code is generated under the hood, and the underlying AWS Step Functions state machine is automatically updated.
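The ASL that Strato Workflows generates is not shown on this page. The sketch below independently illustrates what "connecting states" compiles down to for the simplest case, a linear chain of Fargate tasks. Each state uses the Step Functions ECS integration `arn:aws:states:::ecs:runTask.sync`, which waits for the task to finish before moving on. Each state points to the next via `Next`, and the last one sets `End`. The state names, ARNs, and subnet IDs are hypothetical placeholders.

```python
from typing import List, Sequence


def chain_ecs_tasks(
    task_definition_arns: Sequence[str], cluster_arn: str, subnets: List[str]
) -> dict:
    """Build an ASL definition that runs Fargate tasks one after another."""
    if not task_definition_arns:
        raise ValueError("need at least one task")
    names = [f"Step{i + 1}" for i in range(len(task_definition_arns))]
    states = {}
    for i, (name, td_arn) in enumerate(zip(names, task_definition_arns)):
        state = {
            "Type": "Task",
            # .sync: wait for the ECS task to stop before continuing
            "Resource": "arn:aws:states:::ecs:runTask.sync",
            "Parameters": {
                "LaunchType": "FARGATE",
                "Cluster": cluster_arn,
                "TaskDefinition": td_arn,
                "NetworkConfiguration": {"AwsvpcConfiguration": {"Subnets": subnets}},
            },
        }
        if i + 1 < len(names):
            state["Next"] = names[i + 1]  # connect to the following state
        else:
            state["End"] = True  # last state terminates the workflow
        states[name] = state
    return {"Comment": "Sequential Fargate workflow (illustrative)", "StartAt": names[0], "States": states}
```

Real workflows add branching, parallelism, retries, and error handling on top of this basic shape, which is where hand-writing ASL becomes tedious.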

Users have access to the raw ASL generated by Strato Workflows. A workflow's ASL can be exported, stored in version control such as Github, and even imported directly into AWS Step Functions, which means there is no vendor lock-in for Strato Workflows users.
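The sketch below shows the export/import round trip:

- write an ASL definition to a JSON file suitable for committing to Git;
- read it back;
- create a Step Functions state machine from it.

Creation uses boto3's real `create_state_machine(name=..., definition=..., roleArn=...)` call. The helper names are hypothetical. Running the import step requires boto3, AWS credentials, and an IAM role that Step Functions can assume.

```python
import json
from pathlib import Path


def export_asl(definition: dict, path: Path) -> None:
    """Write an ASL definition as stable, pretty-printed JSON (diff-friendly in Git)."""
    path.write_text(json.dumps(definition, indent=2, sort_keys=True) + "\n")


def load_asl(path: Path) -> dict:
    """Read an exported ASL definition back into a dict."""
    return json.loads(path.read_text())


def import_to_step_functions(definition: dict, name: str, role_arn: str) -> str:
    """Create a state machine from an exported definition and return its ARN."""
    import boto3  # imported lazily so the export helpers work without boto3

    client = boto3.client("stepfunctions")
    response = client.create_state_machine(
        name=name, definition=json.dumps(definition), roleArn=role_arn
    )
    return response["stateMachineArn"]
```

Sorting keys on export keeps the file stable between exports, so version-control diffs show only real changes to the workflow.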

A set of simple environment variables is automatically passed to the Docker containers running in ECS Fargate, indicating the correct EFS path or S3 bucket and key for the inputs and outputs of each workflow state (i.e., each ECS Fargate task).
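The exact variable names are documented at strato.earth/docs and are not reproduced here. The sketch below instead uses hypothetical names (`INPUT_PATH`, `INPUT_BUCKET`, `INPUT_KEY`, and the matching `OUTPUT_*` variables) to show how a containerized algorithm might resolve where to read and write. An EFS path is used when present. Otherwise, an S3 bucket and key are combined into an `s3://` URI.

```python
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class StateIO:
    input_location: str
    output_location: str


def resolve_io(env: Mapping[str, str]) -> StateIO:
    """Work out this workflow state's input and output locations.

    The variable names are hypothetical placeholders, not Strato Workflows'
    documented names.
    """

    def location(prefix: str) -> str:
        path = env.get(f"{prefix}_PATH")
        if path:
            return path  # mounted EFS path takes precedence
        bucket, key = env.get(f"{prefix}_BUCKET"), env.get(f"{prefix}_KEY")
        if bucket and key:
            return f"s3://{bucket}/{key}"
        raise KeyError(f"no {prefix} location found in environment")

    return StateIO(location("INPUT"), location("OUTPUT"))
```

At container start-up, the algorithm would call `resolve_io(os.environ)` and pass the two locations to its own read and write code. This keeps the algorithm itself unaware of which workflow it is running in.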

Output data may be previewed directly in the dashboard, or downloaded with a click.

Strato Earth offers a dedicated Slack channel for each client in order to promptly resolve any issues or questions that may arise.

Bug fixes are provided free of charge. Custom feature requests, once approved, are billed at a discounted consulting rate, as are consulting services for code refactoring.

Strato Workflows documentation is available at strato.earth/docs.

Project type: Strato Workflows, Strato Maps

Using only scene statistics without ancillary data inputs, ARSI's RESOLV™ software allows small sat companies to achieve near real-time atmospheric correction of satellite imagery, so that the imagery can be applied to time-sensitive use cases.

ARSI needed a cloud-based platform to automate their atmospheric correction process and provide live access to clients. For demo purposes, a web interface was also required so that users could select imagery for atmospheric correction, and download the corrected files.

ARSI was able to automate and provide live access to their Resolv software within a matter of days via the Strato Workflows Github integration and drag-and-drop UI. Strato Workflows was launched in ARSI's own Amazon Web Services account, so their proprietary algorithm was not exposed outside of their own version control and cloud accounts. As ARSI implements new versions of the Resolv software tailored to the technical specifications of different small sat providers, ARSI scientists can spin up new cloud-based automated workflows via Strato Workflows in a matter of hours, without hiring a DevOps team.

Strato Maps, hosted in ARSI's own cloud account and at a domain name of their choosing, was integrated with Strato Workflows to provide the demo web interface where users can select images for atmospheric correction via the Resolv software.

Project type: Strato Workflows

Tesera’s High Resolution Inventory Solutions (HRIS) deploys advanced machine learning and data analytics to produce reliable forestry and natural resource inventories. HRIS is a blend of area-based modelling (using LiDAR, multispectral imagery, ground plot data, complemented with terrain and climate data) and individual tree crown delineation to provide a more reliable, scalable and consistent forest inventory.

Tesera needed to automate their HRIS data processing algorithms to run at scale against big data in the cloud. The solution needed to allow for re-combination of the algorithms into a series of differentiated workflows. Tesera's own forestry experts and data scientists would need to be able to build and iterate on the workflows directly, as only they have the required expertise to combine their algorithms into intricate automated workflows, and evaluate the output datasets.

Tesera opted to use Strato Workflows to achieve automation. The Strato Workflows Github integration allowed Tesera's existing Docker images to be recombined into cloud-based workflows with only light modification. Tesera's experts used the Strato Workflows drag-and-drop UI to rapidly combine their algorithms into a series of workflows, with easy configuration of parallel processing nodes so that thousands of input files could be processed concurrently by the same algorithm.
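The case study does not say how Strato Workflows represents parallel processing nodes internally. In Amazon States Language, fanning one algorithm out over many input files is typically expressed with a `Map` state, sketched below.

- The state iterates over a list of file keys, here at the hypothetical input path `$.input_files`.
- It runs the same Fargate task once per file.
- It passes each file key to the container through a hypothetical `INPUT_KEY` environment override.
- `MaxConcurrency` caps how many iterations run at once.

AWS documents the inline mode used here as supporting up to 40 concurrent iterations. It also offers a distributed mode for much larger fan-outs, so check the current Step Functions documentation when sizing a real workflow.

```python
from typing import List


def map_state_over_files(
    task_definition_arn: str,
    cluster_arn: str,
    container_name: str,
    subnets: List[str],
    items_path: str = "$.input_files",
    max_concurrency: int = 10,
) -> dict:
    """Build an ASL Map state that runs one Fargate task per input file."""
    return {
        "Type": "Map",
        "ItemsPath": items_path,  # list of file keys in the state input
        "MaxConcurrency": max_concurrency,  # cap on parallel iterations
        "ItemProcessor": {
            "ProcessorConfig": {"Mode": "INLINE"},
            "StartAt": "ProcessFile",
            "States": {
                "ProcessFile": {
                    "Type": "Task",
                    "Resource": "arn:aws:states:::ecs:runTask.sync",
                    "Parameters": {
                        "LaunchType": "FARGATE",
                        "Cluster": cluster_arn,
                        "TaskDefinition": task_definition_arn,
                        "NetworkConfiguration": {"AwsvpcConfiguration": {"Subnets": subnets}},
                        "Overrides": {
                            "ContainerOverrides": [
                                {
                                    "Name": container_name,
                                    # each iteration's input is one file key ("$")
                                    "Environment": [{"Name": "INPUT_KEY", "Value.$": "$"}],
                                }
                            ]
                        },
                    },
                    "End": True,
                }
            },
        },
    }
```

The returned state still needs a `Next` or `End` field added by the caller before it is placed into a full workflow definition.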

The workflow duplication feature has allowed Tesera data scientists to instantly create duplicates of their complex workflows, which can then be easily modified into variant workflows.